Gumroad – Blender LiveLinkFace v0.0.5 Addon FREE 2024 Download

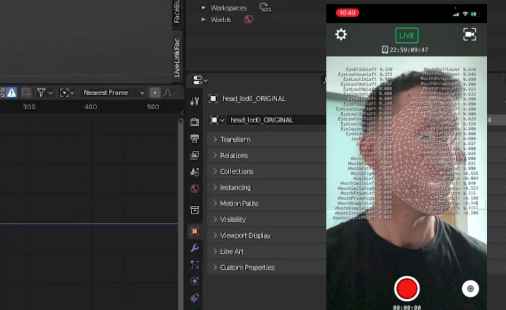

This is an add-on for Blender to stream ARKit blendshapes live from the iPhone LiveLinkFace app to any mesh with matching shape keys and/or bones. The add-on also allows you to import recorded animations from a LiveLinkFace-formatted CSV file.

Requires:

- an iOS device with a TrueDepth camera (iPhone X or later)

- the Live Link Face app, available on the App Store (apple.com)

- Blender 3.1.0 or higher (earlier versions may work but this is untested)

- your own mesh rigged with ARKit shape keys/armatures.

If you do not have your own mesh, you can download one from the following link:

MetaHuman Head – 52 blendshapes – ARkit ready (Free) (gumroad.com)

(Be aware that this mesh is not rigged for head pitch/yaw/roll, so those ARKit values will have no effect on it.)

IMPORTANT – this is an unofficial add-on and I am not associated with LiveLinkFace, Unreal Engine or Epic.

General

1) Download the add-on file.

2) Start Blender and open a file containing an object rigged with ARKit shape keys

3) Click Edit->Preferences, then Add-ons in the left pane

4) Click “Install”, then select the file you downloaded in (1) and click “Install Add-on”

5) Click the checkbox next to “3D View: LiveLinkFace Add-on” to enable the add-on and close the Preferences panel

6) In the 3D view for your scene, select the “LiveLinkFace” tab on the right

7) Select the mesh you want to animate, then click the + button in the “Target” box. The name of your mesh must appear here; if this is empty, nothing will be animated.
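Before streaming, it can help to confirm that the target mesh actually carries the shape keys you expect. The following is only a minimal sketch: `ARKIT_SAMPLE` is an illustrative subset of the 52 ARKit names, `missing_shape_keys` is a hypothetical helper that is not part of the add-on, and the case-insensitive comparison is an assumption (the add-on's own matching rules may differ).

```python
# Illustrative subset of ARKit blendshape names (the full set has 52 entries).
ARKIT_SAMPLE = [
    "eyeBlinkLeft",
    "eyeBlinkRight",
    "jawOpen",
    "mouthSmileLeft",
    "mouthSmileRight",
]


def missing_shape_keys(mesh_key_names, expected=ARKIT_SAMPLE):
    """Return the expected names that have no case-insensitive match on the mesh."""
    present = {name.lower() for name in mesh_key_names}
    return [name for name in expected if name.lower() not in present]


# Inside Blender, the names could be collected from the selected object, e.g.:
#   names = [kb.name for kb in bpy.context.object.data.shape_keys.key_blocks]
print(missing_shape_keys(["Basis", "EyeBlinkLeft", "jawOpen"]))
# ['eyeBlinkRight', 'mouthSmileLeft', 'mouthSmileRight']
```

An empty list means every name in the sample was found on the mesh.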

Streaming

1) Start the LiveLinkFace app on your iPhone and tap the Settings icon in the top-left

2) Tap the “Live Link” row. If the IP address of your host machine (the one running Blender and the LiveLinkFace add-on) does not appear under “Targets”, tap “Add Target” and add the IP address.

3) Back in Blender, most people should be able to leave the Host IP/Port as the defaults (0.0.0.0/11111). If this does not work, set the IP address and the port to the exact values you specified in the LiveLinkFace app.

4) IMPORTANT – make sure that “Protocol” is set to “4.25 and Later”.

5) Click “Connect”, and you should see your mesh in the 3D preview sync with the LiveLinkFace stream.
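If nothing moves after connecting, one way to narrow the problem down is to check whether any packets from the phone reach the host at all. The sketch below assumes the app streams over UDP to the port configured above; `open_listener` and `wait_for_packet` are hypothetical helper names, and the script is meant to be run outside Blender while the add-on is disconnected, so that the port is free.

```python
import socket


def open_listener(host="0.0.0.0", port=11111, timeout=5.0):
    """Bind a UDP socket on host/port and return it (assumes a UDP stream)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    sock.settimeout(timeout)
    return sock


def wait_for_packet(sock, bufsize=4096):
    """Return (byte_count, sender_ip) for the first packet, or None on timeout."""
    try:
        data, addr = sock.recvfrom(bufsize)
    except socket.timeout:
        return None
    return len(data), addr[0]


# Example use, with the LiveLinkFace app already streaming:
#   listener = open_listener()
#   print(wait_for_packet(listener) or "no packet received")
#   listener.close()
```

If this reports no packet, the likely culprits are the target IP address in the app, a firewall on the host, or the two devices being on different networks.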

CSV Import

1) When you record a session via LiveLinkFace, a CSV is saved alongside the raw video on your phone.

2) This CSV can be imported into Blender by clicking the “Load from CSV” button and selecting the file. Ensure that the correct target mesh is selected (step 7 under “General” above).

3) Two new actions will be created (one for shape keys, one for bones) to animate the target mesh based on the values in the CSV. You may need to change the frame rate of your Blender project to match the frame rate of the LiveLinkFace recording (60fps).
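To inspect a recording outside Blender, the CSV can be read with Python's standard `csv` module. This is a rough sketch rather than the add-on's own importer: the column names in `sample` (`Timecode`, `BlendShapeCount`, `EyeBlinkLeft`, `JawOpen`) and the sample values are assumptions made for illustration, and `project_frame` simply shows the arithmetic for placing 60fps rows on a timeline running at a different rate.

```python
import csv
import io


def read_livelinkface_csv(text):
    """Parse CSV text into a list of {column name: float} dicts, one per row."""
    rows = []
    for record in csv.DictReader(io.StringIO(text)):
        values = {}
        for name, raw in record.items():
            try:
                values[name] = float(raw)
            except (TypeError, ValueError):
                continue  # skip non-numeric columns such as a timecode
        rows.append(values)
    return rows


def project_frame(source_index, source_fps=60.0, project_fps=24.0, start=1):
    """Map a source row index to a (possibly fractional) project frame."""
    return start + source_index * project_fps / source_fps


# Made-up sample data; a real recording has one column per ARKit value.
sample = (
    "Timecode,BlendShapeCount,EyeBlinkLeft,JawOpen\n"
    "00:00:01:00.000,61,0.10,0.50\n"
    "00:00:01:01.000,61,0.20,0.25\n"
)
frames = read_livelinkface_csv(sample)
print(frames[1]["JawOpen"])  # 0.25
print(project_frame(60))     # 25.0
```

Setting the project to 60fps avoids the fractional frames that the second function would otherwise produce.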

Bone animations

Some LiveLinkFace/ARKit blendshapes may not correspond directly to shape keys on your mesh, but rather to bone rotations (e.g. HeadPitch/HeadYaw/HeadRoll).

One option for matching the two is to create a custom property on your target mesh that matches the name of the ARKit value (e.g. “HeadPitch”), then create a driver for the bone (e.g. “neck”) that matches the orientation you wish to animate. Streaming to the plugin or loading from CSV will then animate the bone orientations according to the range. If you choose to create a driver using quaternions, you can probably use the ARKit value directly, since -1/1 for the ARKit values will correspond to a -90/90 degree rotation on the bone.
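For a driver on an Euler rotation channel, the value has to be converted to radians. The arithmetic can be sketched as below, assuming the -1/1 to -90/90 degree range described above; `arkit_to_radians` is a hypothetical name, and the clamping is an added safeguard rather than something the add-on is known to do.

```python
import math


def arkit_to_radians(value, max_degrees=90.0):
    """Map an ARKit value in [-1, 1] linearly to a rotation in radians."""
    clamped = max(-1.0, min(1.0, value))
    return math.radians(clamped * max_degrees)


print(round(math.degrees(arkit_to_radians(0.5)), 6))  # 45.0
```

In a scripted driver expression, the same mapping would look something like `radians(90 * var)`, where `var` is a driver variable reading the custom property; treat that expression as a starting point to adjust for your rig's axes and sign conventions.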
